CLUE Enhancements

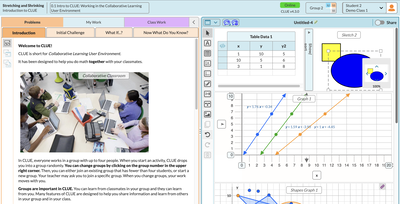

CLUE is the Concord Consortium's Collaborative Learning User Environment, a multi-purpose web application built around student collaboration. CLUE does not have a single entry point; instead, student- and teacher-ready "units" are set up with embedded curriculum content and a configuration of the available features and tools, which can vary widely. The MothEd units use CLUE as a data collection, sharing, and analysis platform for middle school life sciences; the Connected Math Project uses it for inquiry-based math; and the Mobile Design Studio uses it for engineering design.

CLUE offers a wide range of tools that can be enabled in units, including:

- Tables

- Graphs

- Geometrical constructions

- Sketches

- Embedded simulations

- Connections to sensors and actuators

- Block programming

My Role: As Principal Engineer, I created or updated several of the CLUE tools:

- Developed a Bar Graph tool from scratch, using the visx and D3 libraries.

- Rewrote the SVG-based Sketch tool, adding features such as scaling, zooming, grouping, reflection and rotation.

- Enhanced the Graph tool to allow multiple datasets to be combined into one graph, and new data to be added by manually plotting points.

- Added the ability to create "Questions", which group multiple tiles together as a question and answer.

- Collaborated on rewriting the research reporting system, which compiles reports from the stored event logs of all users' actions (this system includes reports from CLUE and other CC tools).

Technologies: TypeScript, MobX, MobX-State-Tree, JSON, Firebase. For sketches and graphs: SVG. For bar graphs: visx and D3. For the geometry tool: JSXGraph. The reports server is written in Elixir and uses AWS Athena, SQL, S3, and Glue. For AI Evaluation: ChatGPT, OpenAI API, and Firebase functions.

AI Evaluation: Along with adding and refining the student-facing tools, I spearheaded the work to add an AI evaluation subsystem. This was part of the Mobile Design Studio project, which uses CLUE to introduce students to the practices of engineering design. We added a back-end system that automatically takes student documents (commonly a set of sketches and text descriptions) and asks an OpenAI large language model to determine, from a screenshot of the student work, which aspect of design the student appears to be focusing on: the system's function, form, environment, or the user. The result was then used to provide feedback to the student and the teacher, and ultimately to provide suggestions and hints that encourage students to view their work from all of these viewpoints.
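To make the classification step concrete, here is a minimal TypeScript sketch of how such an evaluation might be structured. It is an illustration under stated assumptions, not the actual CLUE implementation: the names `AskModel`, `buildPrompt`, `parseFocus`, `classifyDocument`, and `missingFoci` are hypothetical, the prompt wording is invented, and the model call is passed in as a function so that no particular OpenAI API signature is assumed.

```typescript
// The four design aspects named in the description above.
const DESIGN_FOCI = ["function", "form", "environment", "user"] as const;
type DesignFocus = (typeof DESIGN_FOCI)[number];

// Hypothetical signature: sends a prompt plus a base64 screenshot to a
// vision-capable model and resolves with the model's raw text reply.
type AskModel = (prompt: string, screenshotBase64: string) => Promise<string>;

// Illustrative prompt (assumed wording) that constrains the answer to one label.
function buildPrompt(): string {
  return [
    "You are reviewing a student's engineering design document.",
    "Based on the screenshot, which aspect of design is the student focusing on?",
    `Answer with exactly one word from: ${DESIGN_FOCI.join(", ")}.`,
  ].join("\n");
}

// Normalize the reply (case, whitespace, punctuation) and accept it only
// if it matches one of the known labels; otherwise return undefined.
function parseFocus(reply: string): DesignFocus | undefined {
  const word = reply.trim().toLowerCase().replace(/[^a-z]/g, "");
  return DESIGN_FOCI.find((focus) => focus === word);
}

// Classify one document screenshot using the injected model call.
async function classifyDocument(
  askModel: AskModel,
  screenshotBase64: string
): Promise<DesignFocus | undefined> {
  const reply = await askModel(buildPrompt(), screenshotBase64);
  return parseFocus(reply);
}

// Aspects the student has not yet addressed; these could drive hints.
function missingFoci(seen: DesignFocus[]): DesignFocus[] {
  return DESIGN_FOCI.filter((focus) => seen.indexOf(focus) === -1);
}
```

Restricting the model to a fixed label set and validating its reply keeps unexpected output from reaching students or teachers, and tracking which labels have been seen gives a simple basis for suggesting the viewpoints a student has not yet considered.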